
Deep learning is now being used to translate between languages, predict how proteins fold, analyze medical scans, and play games as complex as Go, to name just a few applications of a technique that is now becoming pervasive. Success in these and other realms has brought this machine-learning technique from obscurity in the early 2000s to dominance today.

Although deep learning’s rise to fame is relatively recent, its origins are not. In 1958, back when mainframe computers filled rooms and ran on vacuum tubes, knowledge of the interconnections between neurons in the brain inspired Frank Rosenblatt at Cornell to design the first artificial neural network, which he presciently described as a “pattern-recognizing device.” But Rosenblatt’s ambitions outpaced the capabilities of his era, and he knew it. Even his inaugural paper was forced to acknowledge the voracious appetite of neural networks for computational power, bemoaning that “as the number of connections in the network increases…the burden on a conventional digital computer soon becomes excessive.”

Fortunately for such artificial neural networks (later rechristened “deep learning” when they included extra layers of neurons), decades of Moore’s Law and other improvements in computer hardware yielded a roughly 10-million-fold increase in the number of computations that a computer could do in a second. So when researchers returned to deep learning in the late 2000s, they wielded tools equal to the challenge.

These more-powerful computers made it possible to construct networks with vastly more connections and neurons and hence greater ability to model complex phenomena. Researchers used that ability to break record after record as they applied deep learning to new tasks.

While deep learning’s rise may have been meteoric, its future may be bumpy. Like Rosenblatt before them, today’s deep-learning researchers are nearing the frontier of what their tools can achieve. To understand why this will reshape machine learning, you must first understand why deep learning has been so successful and what it costs to keep it that way.

Deep learning is a modern incarnation of the long-running trend in artificial intelligence that has been moving from streamlined systems based on expert knowledge toward flexible statistical models. Early AI systems were rule based, applying logic and expert knowledge to derive results. Later systems incorporated learning to set their adjustable parameters, but these were usually few in number.

Today’s neural networks also learn parameter values, but those parameters are part of such flexible computer models that, if they are big enough, they become universal function approximators, meaning they can fit any type of data. This unlimited flexibility is the reason why deep learning can be applied to so many different domains.

The flexibility of neural networks comes from taking the many inputs to the model and having the network combine them in myriad ways. This means the outputs won’t be the result of applying simple formulas but instead immensely complicated ones.

For example, when the cutting-edge image-recognition system Noisy Student converts the pixel values of an image into probabilities for what the object in that image is, it does so using a network with 480 million parameters. The training to ascertain the values of such a large number of parameters is even more remarkable because it was done with only 1.2 million labeled images, which may understandably confuse those of us who remember from high school algebra that we are supposed to have more equations than unknowns. Breaking that rule turns out to be the key.

Deep-learning models are overparameterized, which is to say they have more parameters than there are data points available for training. Classically, this would lead to overfitting, where the model learns not only general trends but also the random vagaries of the data it was trained on. Deep learning avoids this trap by initializing the parameters randomly and then iteratively adjusting sets of them to better fit the data using a method called stochastic gradient descent. Surprisingly, this procedure has been proven to ensure that the learned model generalizes well.
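The mechanics described here (random initialization followed by repeated small updates on individual training points) can be illustrated with a toy sketch. It is an assumption-laden illustration, not the procedure used for any real deep network: the model is linear rather than deep, the data are random numbers, and the names `train_sgd`, `mean_squared_error`, `N_POINTS`, and `N_PARAMS` are hypothetical. It shows only that an overparameterized model (20 parameters, 5 data points) can be fitted this way; it does not demonstrate generalization.

```python
import random


def train_sgd(xs, ys, n_params, lr=0.05, epochs=200, seed=0):
    """Fit a linear model y ~ w.x with plain stochastic gradient descent."""
    rng = random.Random(seed)
    # Step 1: initialize the parameters randomly.
    w = [rng.gauss(0.0, 0.1) for _ in range(n_params)]
    order = list(range(len(xs)))
    for _ in range(epochs):
        # Step 2: visit the training points in a random order.
        rng.shuffle(order)
        for i in order:
            pred = sum(wj * xj for wj, xj in zip(w, xs[i]))
            err = pred - ys[i]
            # Gradient of 0.5 * err**2 with respect to w is err * x.
            w = [wj - lr * err * xj for wj, xj in zip(w, xs[i])]
    return w


def mean_squared_error(w, xs, ys):
    """Average squared prediction error over the data set."""
    total = 0.0
    for x, y in zip(xs, ys):
        pred = sum(wj * xj for wj, xj in zip(w, x))
        total += (pred - y) ** 2
    return total / len(xs)


# Toy, made-up data: far more parameters (20) than data points (5).
N_POINTS, N_PARAMS = 5, 20
data_rng = random.Random(1)
xs = [[data_rng.uniform(-1, 1) for _ in range(N_PARAMS)] for _ in range(N_POINTS)]
ys = [data_rng.uniform(-1, 1) for _ in range(N_POINTS)]

# epochs=0 returns the random starting point for the same seed.
initial_loss = mean_squared_error(train_sgd(xs, ys, N_PARAMS, epochs=0), xs, ys)
final_loss = mean_squared_error(train_sgd(xs, ys, N_PARAMS, epochs=200), xs, ys)
print(f"training loss before: {initial_loss:.4f}, after: {final_loss:.6f}")
```

The learning rate and epoch count are arbitrary choices that happen to be stable for inputs in the range used here; a real system would tune them.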

The success of flexible deep-learning models can be seen in machine translation. For decades, software has been used to translate text from one language to another. Early approaches to this problem used rules designed by grammar experts. But as more textual data became available in specific languages, statistical approaches (ones that go by such esoteric names as maximum entropy, hidden Markov models, and conditional random fields) could be applied.

Initially, the approaches that worked best for each language differed based on data availability and grammatical properties. For example, rule-based approaches to translating languages such as Urdu, Arabic, and Malay outperformed statistical ones, at first. Today, all these approaches have been outpaced by deep learning, which has proven itself superior almost everywhere it’s applied.

So the good news is that deep learning provides enormous flexibility. The bad news is that this flexibility comes at an enormous computational cost. This unfortunate reality has two parts.

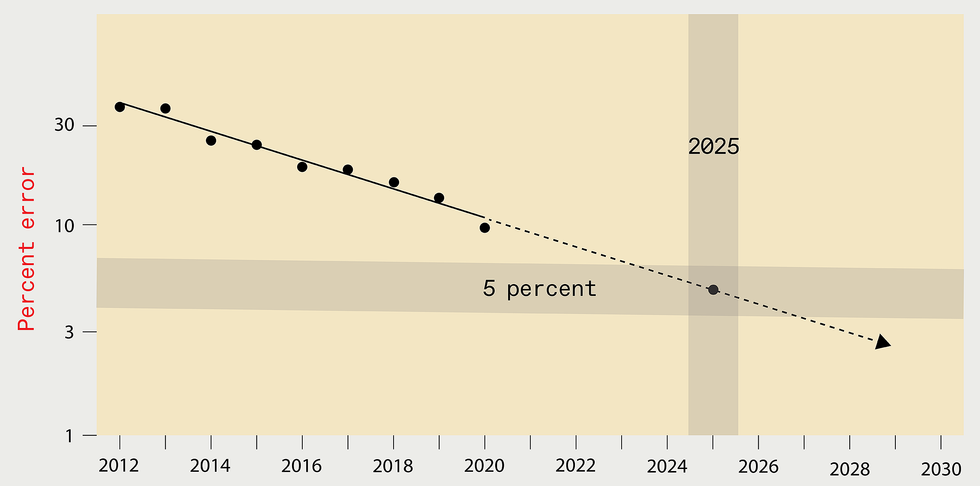

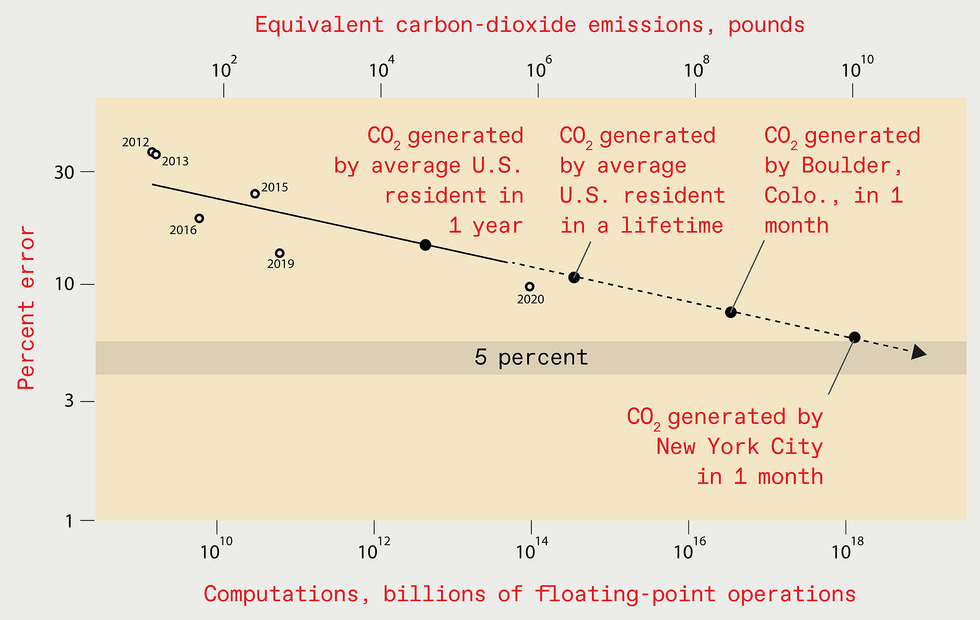

Extrapolating the gains of recent years might suggest that by 2025 the error level in the best deep-learning systems designed for recognizing objects in the ImageNet data set should be reduced to just 5 percent [top]. But the computing resources and energy required to train such a future system would be enormous, leading to the emission of as much carbon dioxide as New York City generates in one month [bottom].

SOURCE: N.C. THOMPSON, K. GREENEWALD, K. LEE, G.F. MANSO

The first part is true of all statistical models: To improve performance by a factor of k, at least k² more data points must be used to train the model. The second part of the computational cost comes explicitly from overparameterization. Once accounted for, this yields a total computational cost for improvement of at least k⁴. That little 4 in the exponent is very expensive: A 10-fold improvement, for example, would require at least a 10,000-fold increase in computation.
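The arithmetic behind these lower bounds is easy to check. The sketch below simply evaluates the two powers stated in the text for a few improvement factors; the function names are hypothetical, and the results are theoretical minimums rather than measured costs.

```python
def min_data_multiplier(k):
    """Lower bound on extra training data for a k-fold improvement (k squared)."""
    return k ** 2


def min_compute_multiplier(k):
    """Lower bound on extra computation for a k-fold improvement (k to the fourth)."""
    return k ** 4


for k in (2, 5, 10):
    print(
        f"{k}-fold improvement: at least {min_data_multiplier(k)}x data, "
        f"at least {min_compute_multiplier(k)}x computation"
    )
```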

To make the flexibility-computation trade-off more vivid, consider a scenario where you are trying to predict whether a patient’s X-ray reveals cancer. Suppose further that the true answer can be found if you measure 100 details in the X-ray (often called variables or features). The challenge is that we don’t know ahead of time which variables are important, and there could be a very large pool of candidate variables to consider.

The expert-system approach to this problem would be to have people who are knowledgeable in radiology and oncology specify the variables they think are important, allowing the system to examine only those. The flexible-system approach is to test as many of the variables as possible and let the system figure out on its own which are important, requiring more data and incurring much higher computational costs in the process.

Models for which experts have established the relevant variables are able to learn quickly what values work best for those variables, doing so with limited amounts of computation, which is why they were so popular early on. But their ability to learn stalls if an expert hasn’t correctly specified all the variables that should be included in the model. In contrast, flexible models like deep learning are less efficient, taking vastly more computation to match the performance of expert models. But, with enough computation (and data), flexible models can outperform ones for which experts have attempted to specify the relevant variables.

Clearly, you can get improved performance from deep learning if you use more computing power to build bigger models and train them with more data. But how expensive will this computational burden become? Will costs become sufficiently high that they hinder progress?

To answer these questions in a concrete way, we recently gathered data from more than 1,000 research papers on deep learning, spanning the areas of image classification, object detection, question answering, named-entity recognition, and machine translation. Here, we will only discuss image classification in detail, but the lessons apply broadly.

Over the years, reducing image-classification errors has come with an enormous expansion in computational burden. For example, in 2012 AlexNet, the model that first showed the power of training deep-learning systems on graphics processing units (GPUs), was trained for five to six days using two GPUs. By 2018, another model, NASNet-A, had cut the error rate of AlexNet in half, but it used more than 1,000 times as much computing to achieve this.

Our analysis of this phenomenon also allowed us to compare what’s actually happened with theoretical expectations. Theory tells us that computing needs to scale with at least the fourth power of the improvement in performance. In practice, the actual requirements have scaled with at least the ninth power.

This ninth power means that to halve the error rate, you can expect to need more than 500 times the computational resources. That’s a devastatingly high price. There may be a silver lining here, however. The gap between what’s happened in practice and what theory predicts might mean that there are still undiscovered algorithmic improvements that could greatly improve the efficiency of deep learning.
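The "more than 500 times" figure follows directly from the exponent: halving the error is a 2-fold improvement, and 2 raised to the ninth power is 512. The sketch below compares that with the theoretical fourth-power bound. The constant and function names are hypothetical, and both exponents are the "at least" values quoted in the text, so the outputs are lower bounds.

```python
THEORY_EXPONENT = 4    # theoretical lower bound quoted in the article
OBSERVED_EXPONENT = 9  # scaling observed in practice, per the article


def compute_multiplier(improvement_factor, exponent):
    """Computation needed, as a multiple of today's, for a given improvement."""
    return improvement_factor ** exponent


halving_theory = compute_multiplier(2, THEORY_EXPONENT)
halving_observed = compute_multiplier(2, OBSERVED_EXPONENT)
gap = halving_observed / halving_theory

print(f"theory: {halving_theory}x, observed: {halving_observed}x, gap: {gap:.0f}x")
```

The ratio between the two numbers is one way to picture the headroom that better algorithms might, in principle, recover.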


As we noted, Moore’s Law and other hardware advances have provided massive increases in chip performance. Does this mean that the escalation in computing requirements doesn’t matter? Unfortunately, no. Of the 1,000-fold difference in the computing used by AlexNet and NASNet-A, only a six-fold improvement came from better hardware; the rest came from using more processors or running them longer, incurring higher costs.
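A rough decomposition makes the point concrete. The sketch below assumes the two contributions multiply (hardware gain times everything else equals the total), which the text implies but does not state, and it treats "more than 1,000 times" as exactly 1,000. The variable names are hypothetical.

```python
import math

TOTAL_COMPUTE_RATIO = 1000  # NASNet-A vs. AlexNet, rounded down from "more than 1,000"
HARDWARE_GAIN = 6           # share attributed to better hardware

# Assumption: total = hardware gain x (more processors and longer runs).
non_hardware_factor = TOTAL_COMPUTE_RATIO / HARDWARE_GAIN

# Share of the growth explained by hardware, measured on a log scale.
hardware_share_log = math.log(HARDWARE_GAIN) / math.log(TOTAL_COMPUTE_RATIO)

print(f"more processors or longer runs: about {non_hardware_factor:.0f}x")
print(f"hardware share of growth (log scale): {hardware_share_log:.0%}")
```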

Having estimated the computational cost-performance curve for image recognition, we can use it to estimate how much computation would be needed to reach even more impressive performance benchmarks in the future. For example, achieving a 5 percent error rate would require 10¹⁹ billion floating-point operations.
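To get a feel for that number, read it as 10 to the 19th power billion operations, or 10 to the 28th in total. The sketch below converts it into running time on a purely hypothetical machine that sustains 10 to the 18th floating-point operations per second; that throughput is an illustrative assumption, not a figure from the article.

```python
OPERATIONS_IN_BILLIONS = 10 ** 19
BILLION = 10 ** 9
total_flops = OPERATIONS_IN_BILLIONS * BILLION  # 10**28 operations

# Hypothetical sustained throughput, chosen only for illustration.
ASSUMED_FLOPS_PER_SECOND = 10 ** 18

SECONDS_PER_YEAR = 365.25 * 24 * 3600
seconds = total_flops // ASSUMED_FLOPS_PER_SECOND
years = seconds / SECONDS_PER_YEAR

print(f"total operations: {total_flops:.0e}")
print(f"time on the assumed machine: about {years:.0f} years")
```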

Important work by scholars at the University of Massachusetts Amherst allows us to understand the economic cost and carbon emissions implied by this computational burden. The answers are grim: Training such a model would cost US $100 billion and would produce as much carbon emissions as New York City does in a month. And if we estimate the computational burden of a 1 percent error rate, the results are considerably worse.

Is extrapolating out so many orders of magnitude a reasonable thing to do? Yes and no. Certainly, it is important to understand that the predictions aren’t precise, although with such eye-watering results, they don’t need to be to convey the overall message of unsustainability. Extrapolating this way would be unreasonable if we assumed that researchers would follow this trajectory all the way to such an extreme outcome. We don’t. Faced with skyrocketing costs, researchers will either have to come up with more efficient ways to solve these problems, or they will abandon working on these problems and progress will languish.

On the other hand, extrapolating our results is not only reasonable but also important, because it conveys the magnitude of the challenge ahead. The leading edge of this problem is already becoming apparent. When Google subsidiary DeepMind trained its system to play Go, it was estimated to have cost $35 million. When DeepMind’s researchers designed a system to play the StarCraft II video game, they purposefully didn’t try multiple ways of architecting an important component, because the training cost would have been too high.

At OpenAI, an important machine-learning think tank, researchers recently designed and trained a much-lauded deep-learning language system called GPT-3 at the cost of more than $4 million. Even though they made a mistake when they implemented the system, they didn’t fix it, explaining simply in a supplement to their scholarly publication that “due to the cost of training, it wasn’t feasible to retrain the model.”

Even businesses outside the tech industry are now starting to shy away from the computational expense of deep learning. A large European supermarket chain recently abandoned a deep-learning-based system that markedly improved its ability to predict which products would be purchased. The company executives dropped that attempt because they judged that the cost of training and running the system would be too high.

Faced with rising economic and environmental costs, the deep-learning community will need to find ways to increase performance without causing computing demands to go through the roof. If they don’t, progress will stagnate. But don’t despair yet: Plenty is being done to address this challenge.

One strategy is to use processors designed specifically to be efficient for deep-learning calculations. This approach was widely used over the last decade, as CPUs gave way to GPUs and, in some cases, field-programmable gate arrays and application-specific ICs (including Google’s Tensor Processing Unit). Fundamentally, all of these approaches sacrifice the generality of the computing platform for the efficiency of increased specialization. But such specialization faces diminishing returns. So longer-term gains will require adopting wholly different hardware frameworks, perhaps hardware that is based on analog, neuromorphic, optical, or quantum systems. Thus far, however, these wholly different hardware frameworks have yet to have much impact.


Another approach to reducing the computational burden focuses on generating neural networks that, when implemented, are smaller. This tactic lowers the cost each time you use them, but it often increases the training cost (what we’ve described so far in this article). Which of these costs matters most depends on the situation. For a widely used model, running costs are the biggest component of the total sum invested. For other models (for example, those that frequently need to be retrained), training costs may dominate. In either case, the total cost must be larger than just the training on its own. So if the training costs are too high, as we’ve shown, then the total costs will be, too.

And that’s the challenge with the various tactics that have been used to make implementation smaller: They don’t reduce training costs enough. For example, one allows for training a large network but penalizes complexity during training. Another involves training a large network and then “prunes” away unimportant connections. Yet another finds as efficient an architecture as possible by optimizing across many models, something called neural-architecture search. While each of these techniques can offer significant benefits for implementation, the effects on training are muted, certainly not enough to address the concerns we see in our data. And in many cases they make the training costs higher.
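As a rough illustration of the pruning tactic, the sketch below zeroes out the smallest-magnitude weights of an already trained layer. Using magnitude as the measure of importance is an assumption made here for simplicity (the article does not say which criterion is used), and the function name and weight values are hypothetical.

```python
def prune_by_magnitude(weights, keep_fraction):
    """Zero out all but the largest-magnitude weights.

    Assumes small absolute value means "unimportant". Ties at the
    threshold are all kept, so slightly more than keep_fraction may survive.
    """
    n_keep = max(1, round(len(weights) * keep_fraction))
    threshold = sorted((abs(w) for w in weights), reverse=True)[n_keep - 1]
    return [w if abs(w) >= threshold else 0.0 for w in weights]


# Made-up weights standing in for one layer of a trained network.
trained_weights = [0.9, -0.05, 0.3, 0.01, -0.7, 0.02]
pruned = prune_by_magnitude(trained_weights, keep_fraction=0.5)
print(pruned)  # [0.9, 0.0, 0.3, 0.0, -0.7, 0.0]
```

Note that the full set of weights has to be trained before anything is removed, which is why this kind of tactic shrinks the deployed model without reducing the training bill.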

One up-and-coming technique that could reduce training costs goes by the name meta-learning. The idea is that the system learns on a variety of data and then can be applied in many areas. For example, rather than building separate systems to recognize dogs in images, cats in images, and cars in images, a single system could be trained on all of them and used multiple times.

Unfortunately, recent work by Andrei Barbu of MIT has revealed how hard meta-learning can be. He and his coauthors showed that even small differences between the original data and where you want to use it can severely degrade performance. They demonstrated that current image-recognition systems depend heavily on things like whether the object is photographed at a particular angle or in a particular pose. So even the simple task of recognizing the same objects in different poses causes the accuracy of the system to be nearly halved.

Benjamin Recht of the University of California, Berkeley, and others made this point even more starkly, showing that even with novel data sets purposely constructed to mimic the original training data, performance drops by more than 10 percent. If even small changes in data cause large performance drops, the data needed for a comprehensive meta-learning system might be enormous. So the great promise of meta-learning remains far from being realized.

Another possible strategy to evade the computational limits of deep learning would be to move to other, perhaps as-yet-undiscovered or underappreciated types of machine learning. As we described, machine-learning systems constructed around the insight of experts can be much more computationally efficient, but their performance can’t reach the same heights as deep-learning systems if those experts cannot distinguish all the contributing factors.

Neuro-symbolic methods and other techniques are being developed to combine the power of expert knowledge and reasoning with the flexibility often found in neural networks.

Like the situation that Rosenblatt faced at the dawn of neural networks, deep learning is today becoming constrained by the available computational tools. Faced with computational scaling that would be economically and environmentally ruinous, we must either adapt how we do deep learning or face a future of much slower progress. Clearly, adaptation is preferable. A clever breakthrough might find a way to make deep learning more efficient or computer hardware more powerful, which would allow us to continue to use these extraordinarily flexible models. If not, the pendulum will likely swing back toward relying more on experts to identify what needs to be learned.
