
This month, Sony starts shipping its first high-resolution event-based camera chips. Ordinary cameras capture complete scenes at regular intervals, even though most of the pixels in those frames don’t change from one scene to the next. The pixels in event-based cameras, a technology inspired by animal vision systems, react only if they detect a change in the amount of light falling on them. As a result, they consume little power and generate far less data while capturing motion especially well. The two Sony chips, the 0.92-megapixel IMX636 and the smaller 0.33-megapixel IMX637, combine Prophesee’s event-based circuits with Sony’s 3D chip-stacking technology to produce a chip with the smallest event-based pixels on the market.
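To make the contrast with frame-based capture concrete, here is a minimal sketch of the idea described above: a pixel reports only when its brightness changes. It is not Prophesee’s or Sony’s circuit. The function name `events_from_frames`, the fixed `threshold`, and the use of plain (rather than logarithmic) intensity are all assumptions made for illustration.

```python
from typing import List, Tuple

# Hypothetical event record: (x, y, time step, polarity); +1 brighter, -1 darker.
Event = Tuple[int, int, int, int]


def events_from_frames(frames: List[List[List[float]]],
                       threshold: float = 0.2) -> List[Event]:
    """Emit an event only where a pixel's brightness moved by at least `threshold`."""
    # Each pixel remembers the level it last reported (assumed behavior).
    reference = [row[:] for row in frames[0]]
    events: List[Event] = []
    for t, frame in enumerate(frames[1:], start=1):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                delta = value - reference[y][x]
                if abs(delta) >= threshold:
                    events.append((x, y, t, 1 if delta > 0 else -1))
                    reference[y][x] = value
    return events


# A 2x3 scene in which a single pixel brightens, stays bright, then dims.
frames = [
    [[0.1, 0.1, 0.1], [0.1, 0.1, 0.1]],
    [[0.1, 0.9, 0.1], [0.1, 0.1, 0.1]],
    [[0.1, 0.9, 0.1], [0.1, 0.1, 0.1]],
    [[0.1, 0.1, 0.1], [0.1, 0.1, 0.1]],
]
events = events_from_frames(frames)
pixels_read_by_frame_camera = len(frames) * 2 * 3
```

In this toy scene a frame-based readout touches 24 pixel values, while the event-style readout produces two events: one when the pixel brightens and one when it dims.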

Prophesee CEO Luca Verre explains what comes next for this neuromorphic technology.

Luca Verre on…

The role of event-based sensors in cars

The long road to this milestone

Prophesee CEO and cofounder Luca Verre

Luca Verre: The scope is broader than just industrial. In the automotive space, we are very active, in fact, in non-safety-related applications. In April we announced a partnership with Xperi, which developed an in-cabin driver monitoring solution for autonomous driving. [Car makers want in-cabin monitoring of the driver to ensure they are attending to driving even when a car is in autonomous mode.] Safety-related applications [such as sensors for autonomous driving] are not in the scope of the IMX636, because that would require safety compliance, which this design is not intended for. However, a number of OEMs and Tier 1 suppliers are evaluating it, fully aware that the sensor cannot be put into mass production as-is. They’re testing it because they want to evaluate the technology’s performance and then potentially consider pushing us and Sony to redesign it to make it compliant with safety requirements. Automotive safety remains an area of interest, but a longer-term one. In any case, if some of this evaluation work leads to a decision for product development, it would take quite a few years [before it appears in a car].

IEEE Spectrum: What’s next for this sensor?

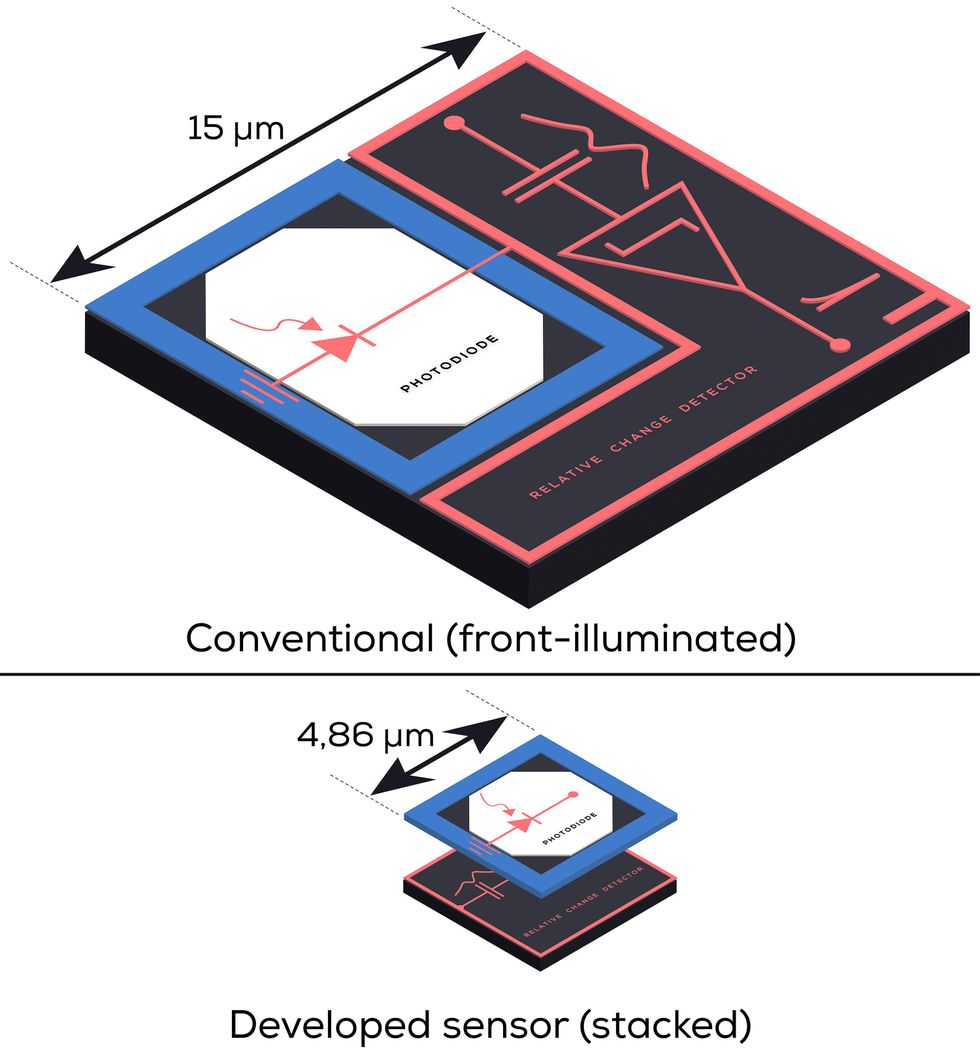

Luca Verre: For the next generation, we are working along three axes. One axis is the reduction of the pixel pitch. Together with Sony, we made great progress by shrinking the pixel pitch from the 15 micrometers of Generation 3 down to 4.86 micrometers with Generation 4. But, of course, there is still a lot of room for improvement by using a more advanced technology node or by using the now-maturing stacking technology of double and triple stacks. [The sensor is a photodiode chip stacked onto a CMOS chip.] You have the photodiode process, which is 90 nanometers, and then the intelligent part, the CMOS part, was developed on 40 nanometers, which is not necessarily a very aggressive node. Going to more aggressive nodes like 28 or 22 nm, the pixel pitch will shrink considerably.

Standard versus stacked pixel design. Image: Prophesee

The benefits are clear: It’s a benefit in terms of cost; it’s a benefit in terms of reducing the optical format for the camera module, which also means a reduction of cost at the system level; plus it allows integration in devices with tighter space constraints. And then, of course, the other related benefit is that with the equivalent silicon surface you can put more pixels in, so the resolution increases.

The event-based technology is not necessarily following the same race that we are still seeing in conventional [color camera chips]; we are not shooting for tens of millions of pixels. It’s not necessary for machine vision, unless you consider some very niche, exotic applications.
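As a rough worked example of the "more pixels on the same silicon surface" point, the sketch below computes how pixel density scales with pitch. It assumes square pixels that tile the area with no overhead for readout circuitry, which real sensors do not achieve; the helper name `density_gain` is hypothetical. The pitch figures are the ones quoted in the interview.

```python
def density_gain(old_pitch_um: float, new_pitch_um: float) -> float:
    """Ratio of pixels per unit area after shrinking the pixel pitch.

    Assumes square pixels with no gaps, so density scales as 1 / pitch**2.
    """
    return (old_pitch_um / new_pitch_um) ** 2


# Generation 3 (15 micrometers) to Generation 4 (4.86 micrometers).
gain = density_gain(15.0, 4.86)
print(f"About {gain:.1f}x more pixels in the same area")
```

Under these idealized assumptions, the move from 15 to 4.86 micrometers corresponds to somewhat more than nine times as many pixels in the same area.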

The second axis is the further integration of processing capability. There is an opportunity to embed more processing capabilities inside the sensor to make it even smarter than it is today. Today it’s a smart sensor in the sense that it’s processing the changes [in a scene]. It’s also formatting these changes to make them more compatible with the conventional [system-on-chip] platform. But you can push this reasoning even further and think of doing some of the local processing inside the sensor [that’s now done in the SoC processor].

The third one is related to power consumption. The sensor, by design, is actually low-power, but if we want to reach an extreme level of low power, there’s still a way of optimizing it. If you look at the IMX636 gen 4, power is not necessarily optimized. In fact, what is being optimized more is the throughput. It’s the capability to actually react to many changes in the scene and be able to correctly timestamp them at extremely high time precision. So in extreme situations where the scene changes a lot, the sensor has a power consumption that is equivalent to that of a conventional image sensor, although the time precision is much higher. You can argue that in those situations you are running at the equivalent of 1,000 frames per second or even beyond. So it’s normal that you consume as much as a 10 or 100 frame-per-second sensor.

[A lower power] sensor could be very appealing, especially for consumer devices or wearable devices, where we know that functionalities related to eye tracking, attention monitoring, and eye lock are becoming very relevant.

IEEE Spectrum: Is getting to lower power just a question of using a more advanced semiconductor technology node?

Luca Verre: Certainly, using a more aggressive technology will help, but I think only marginally. What will help substantially is to have some wake-up mode programmed into the sensor. For example, you can have an array where essentially only a few active pixels are always on. The rest are fully shut down. And then, when you have reached a certain critical mass of events, you wake up everything else.
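The wake-up idea described here can be sketched in a few lines. This is only an illustration of the logic as stated in the interview, not an actual sensor design: the class `WakeUpArray`, its fields, and the choice of a simple running count for the "critical mass" are all assumptions, and the sketch leaves out details a real design would need, such as a time window or a way to go back to sleep.

```python
from dataclasses import dataclass
from typing import Set, Tuple

Pixel = Tuple[int, int]


@dataclass
class WakeUpArray:
    """Toy model: a few always-on pixels gate the rest of the array."""
    sentinels: Set[Pixel]      # the few pixels that stay powered (assumed)
    wake_threshold: int        # "critical mass" of sentinel events (assumed)
    awake: bool = False
    sentinel_events: int = 0

    def on_change(self, pixel: Pixel) -> bool:
        """Return True if a brightness change at `pixel` produces an event."""
        if self.awake:
            return True            # full array is powered; every change is seen
        if pixel not in self.sentinels:
            return False           # pixel is shut down; the change goes unseen
        self.sentinel_events += 1
        if self.sentinel_events >= self.wake_threshold:
            self.awake = True      # wake up everything else
        return True


array = WakeUpArray(sentinels={(0, 0), (4, 4)}, wake_threshold=3)
changes = [(2, 2), (0, 0), (4, 4), (2, 2), (0, 0), (2, 2)]
seen = [array.on_change(p) for p in changes]
```

In this run, changes at the powered-down pixel `(2, 2)` are missed until the third sentinel event wakes the array, after which the same pixel reports normally.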

IEEE Spectrum: What’s the journey been like from concept to commercial product?

Luca Verre: For me it has been a seven-year journey. For my co-founder, CTO Christoph Posch, it has been even longer, because he started on the research in 2004.

To be honest with you, when I started I thought that the time to market would be shorter. And over time, I realized that [the journey] was much more complex, for different reasons. The first and most important reason was the lack of an ecosystem. We are the pioneers, but being the pioneers also has some drawbacks. You are alone in front of everyone else, and you need to bring friends with you, because as a technology provider you provide only a part of the solution. When I was pitching the story of Prophesee at the very beginning, it was a story of a sensor with huge benefits. And I was thinking, naively to be honest, that the benefits were so evident and straightforward that everyone would jump at it. But in reality, even though everyone was interested, they were also seeing the challenge of integrating it: to build an algorithm, to put the sensor inside the camera module, to interface with a system on chip, to build an application. What we did with Prophesee over time was to work more on the system level. Of course, we kept developing the sensor, but we also developed more and more software assets.

By now, more than 1,500 unique users are experimenting with our software. Plus we implemented an evaluation camera and development kit by connecting our sensor to an SoC platform. Today we are able to give the ecosystem not only the sensor but much more than that. We can give them tools that enable them to make the benefits clear.

The second part is more fundamental to the technology. When we started seven years ago, we had a chip that was huge; it had a 30-micrometer pixel pitch. And of course, we knew that the path to enter high-volume applications was very long, and we needed to find this path using a stacked technology. And that’s the reason why, four years ago, we convinced Sony to work together. Back then, the first backside-illumination 3D stacking technology was becoming available, but it was not really accessible. [In backside illumination, light travels through the back of the silicon to reach photosensors on the front. Sony’s 3D stacking technology moves the logic that reads and controls the pixels to a separate silicon chip, allowing pixels to be more densely packed.] The two largest image sensor companies, Sony and Samsung, have their own process in-house. [Others’ technologies] are not as advanced as those of the two market leaders. But we managed to develop a relationship with Sony, gain access to their technology, and manufacture the first industrial-grade, commercially available, backside-illumination 3D-stacked event-based sensor with a size that’s compatible with larger-volume consumer devices.

We knew from the beginning that this would have to be done, but the path to reach that point was not necessarily clear. Sony doesn’t do this very often. The IMX636 is the only [sensor] that Sony has co-developed with an external partner. This, for me, is a reason for pride, because I think they believed in us, in our technology and our team.
