Picture your favorite photograph, say, of an outdoor party. What’s in the picture that you care about most? Is it your friends who were present? Is it the food you were eating? Or is it the amazing sunset in the background that you didn’t notice at the time you took the picture, but that looks like a painting?

Now imagine which of those details you’d choose to keep if you only had enough storage space for one of those features, instead of the entire photo.

Why would I bother to do that, you ask? I can just send the whole picture to the cloud and keep it forever.

That, however, isn’t really true. We live in an age in which it’s cheap to take photos but will eventually be costly to store them en masse, as backup services set limits and begin charging for overages. And we love to share our photos, so we end up storing them in multiple places. Most users don’t think about it, but every image posted to Facebook, Instagram, or TikTok is compressed before it shows up in your feed or timeline. Computer algorithms are constantly making choices about what visual details matter and, based on those choices, generating lower-quality images that take up less digital space.

These compressors aim to preserve certain visual properties while glossing over others, determining what visual information can be thrown away without being noticeable. State-of-the-art image compressors, like the ones that produce the ubiquitous JPEG files we all have floating around on our hard drives and in shared albums in the cloud, can reduce image sizes by a factor of between 5 and 100. But when we push the compression envelope further, artifacts emerge, including blurring, blockiness, and staircase-like bands.

Still, today’s compressors provide pretty good savings in space with acceptable losses in quality. But, as engineers, we are trained to ask if we can do better. So we decided to take a step back from the standard image compression tools and see if there is a path to better compression that, to date, hasn’t been widely traveled.

We started our effort to improve image compression by considering the adage “a picture is worth a thousand words.” While that expression is meant to imply that a thousand words is a lot, and an inefficient way to convey the information contained in a picture, to a computer a thousand words isn’t much data at all. In fact, a thousand digital words contain far fewer bits than any of the images we generate with our smartphones and sling around daily.

So, inspired by the aphorism, we decided to test whether it really takes about a thousand words to describe an image. Because if indeed it does, then perhaps it’s possible to use the descriptive power of human language to compress images more efficiently than the algorithms used today, which work with brightness and color information at the pixel level rather than attempting to understand the contents of the image.

The key to this approach is figuring out what aspects of an image matter most to human viewers, that is, how much they actually care about the visual information that is thrown out. We believe that evaluating compression algorithms based on theoretical and non-intuitive quantities is like gauging the success of your new cookie recipe by measuring how much the cookie deviates from a perfect circle. Cookies are designed to taste delicious, so why measure quality based on something completely unrelated to taste?

It turns out that there is a much easier way to measure image compression quality: just ask some people what they think. By doing so, we found out that humans are pretty great image compressors, and machines have a long way to go.

Algorithms for lossy compression include equations called loss functions. These measure how closely the compressed image matches the original image. A loss function value close to zero indicates that the compressed and original images are very similar. The goal of lossy image compressors is to discard irrelevant details in pursuit of maximum space savings while minimizing the loss function.

Some loss functions center on abstract qualities of an image that don’t necessarily relate to how a human views an image. One classic loss function, for example, involves comparing the original and the compressed images pixel-by-pixel, then adding up the squared differences in pixel values. That’s certainly not how most people think about the differences between two photographs. Loss functions like this one, which don’t reflect the priorities of the human visual system, tend to result in compressed images with obvious visual flaws.
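To make the pixel-by-pixel idea concrete, here is a minimal sketch of a sum-of-squared-differences loss. It assumes grayscale images stored as nested lists of integers; the function name and the tiny sample images are invented for illustration and do not come from any particular compressor.

```python
def sum_squared_error(original, compressed):
    """Add up the squared per-pixel differences of two same-sized grayscale images."""
    same_rows = len(original) == len(compressed)
    same_cols = all(len(a) == len(b) for a, b in zip(original, compressed))
    if not (same_rows and same_cols):
        raise ValueError("images must have the same dimensions")
    return sum(
        (p - q) ** 2
        for row_o, row_c in zip(original, compressed)
        for p, q in zip(row_o, row_c)
    )


# Hypothetical 2x2 images; values are brightness levels.
original = [[10, 20], [30, 40]]
degraded = [[12, 18], [30, 44]]

print(sum_squared_error(original, original))  # 0: identical images
print(sum_squared_error(original, degraded))  # 24 = 4 + 4 + 0 + 16
```

Note that the loss only counts numeric differences. It has no notion of whether the changed pixels belong to a friend’s face or to a patch of sky, which is exactly the limitation described above.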

Most image compressors do take some aspects of the human visual system into account. The JPEG algorithm exploits the fact that the human visual system prioritizes areas of uniform visual information over minor details, so it often degrades features like sharp edges. JPEG, like most other video and image compression algorithms, also preserves more intensity (brightness) information than it does color, since the human eye is much more sensitive to changes in light intensity than it is to minute differences in hue.

For decades, scientists and engineers have attempted to distill aspects of human visual perception into better ways of computing the loss function. Notable among these efforts are methods to quantify the impact of blockiness, contrast, flicker, and the sharpness of edges on the quality of the result as perceived by the human eye. The developers of recent compressors like Google’s Guetzli encoder, a JPEG compressor that runs far slower but produces smaller files than traditional JPEG tools, tout the fact that these algorithms consider important aspects of human visual perception, such as the differences in how the eye perceives specific colors or patterns.

But these compressors still use loss functions that are mathematical at heart, like the pixel-by-pixel sum of squares, which are then adjusted to include some aspects of human perception.

In pursuit of a more human-centric loss function, we set out to determine how much information it takes for a human to accurately describe an image. Then we considered how concise these descriptions can get if the describer can tap into the large repository of images on the Internet that are open to the public. Such public image databases are underutilized in image compression today.

Our hope was that, by pairing them with human visual priorities, we could come up with a whole new paradigm for image compression.

When it comes to developing an algorithm, relying on humans for inspiration is not unusual. Consider the field of language processing. In 1951, Claude Shannon, founder of the field of information theory, used humans to determine the variability of language in order to arrive at an estimate of its entropy. Knowing the entropy would let researchers determine how far text compression algorithms are from the optimal theoretical performance. His setup was simple: he asked one human subject to select a sample of English text, and another to sequentially guess the contents of that sample. The first subject would provide the second with feedback about their guesses: confirmation for every correct guess, and either the correct letter or a prompt for another guess in the case of incorrect guesses, depending on the exact experiment.
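As a rough illustration of how guessing data can turn into an entropy estimate, the sketch below computes the entropy of the distribution of guess counts, the quantity Shannon used as an upper bound on bits per letter in the variant where the guesser keeps going until correct. The function name and the guess counts are invented for illustration; they are not Shannon’s data.

```python
from collections import Counter
from math import log2


def guess_entropy_upper_bound(guess_counts):
    """Entropy (bits per letter) of the empirical distribution of guess counts.

    guess_counts[i] is how many guesses the subject needed for letter i.
    """
    total = len(guess_counts)
    frequencies = Counter(guess_counts)
    return -sum((n / total) * log2(n / total) for n in frequencies.values())


# Hypothetical session: four letters, guessed on the 1st, 1st, 2nd and 3rd try.
session = [1, 1, 2, 3]
print(guess_entropy_upper_bound(session))  # 1.5
```

The more predictable the text, the more often the first guess is right, and the lower the estimate falls.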

With these experiments plus a lot of elegant mathematics, Shannon estimated the theoretically optimal performance of a system designed to compress English-language texts. Since then, other engineers have used experiments with humans to set standards for gauging the performance of artificial intelligence algorithms. Shannon’s estimates also inspired the parameters of the Hutter Prize, a long-standing English text compression contest.

We created a similarly human-based scheme that we hope will also inspire ambitious future applications. (This project was a collaboration between our lab at Stanford and three local high schoolers who were interning with the lab; its success inspired us to launch a full-fledged high school summer internship program at Stanford, called STEM to SHTEM, where the “H” stands for the humanities and the human element.)

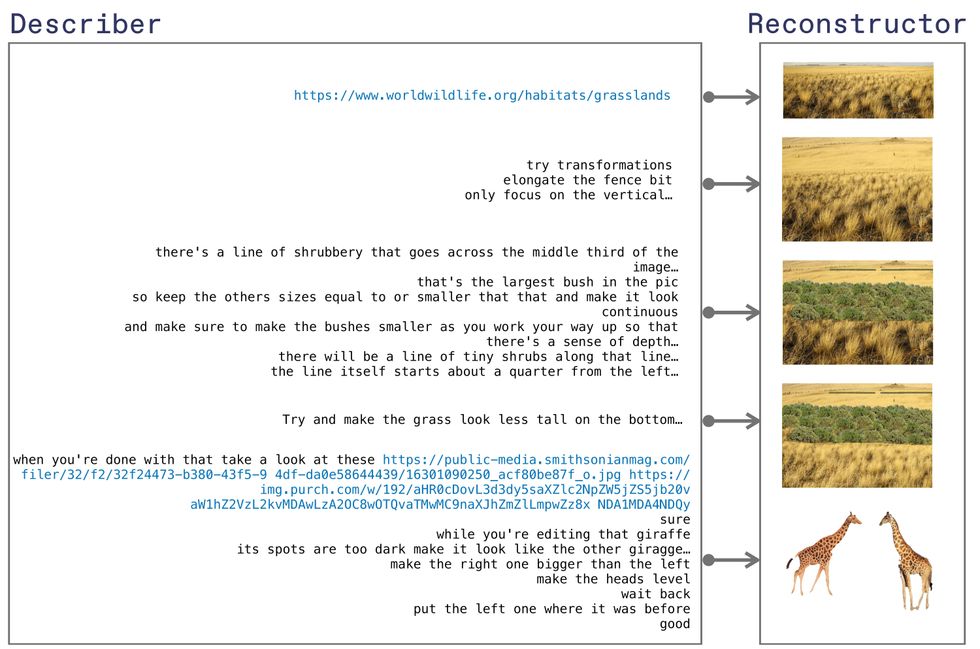

Our setup used two human subjects, like Shannon’s. But instead of selecting text passages, the first subject, dubbed the “describer,” selected a photograph. The second test subject, the “reconstructor,” attempted to recreate the photograph using only the describer’s descriptions of the photograph and image editing software.

In tests of human image compression, the describer sent text messages to the reconstructor, to which the reconstructor could reply by voice. These messages could include references to images found on public websites.

Ashutosh Bhown, Irena Hwang, Soham Mukherjee, and Sean Yang

In our tests, the describers used text-based messaging and, crucially, could include links to any publicly available image on the Internet. This allowed the reconstructors to start with a similar image and edit it, rather than forcing them to create an image from scratch. We used video-conferencing software that allowed the reconstructors to react orally and share their screens with the describers, so the describers could follow the process of reconstruction in real time.

Limiting the describers to text messaging, while allowing links to image databases, helped us measure the amount of information it took to accurately convey the contents of an image given access to related images. To ensure that the description and reconstruction exercise wasn’t trivially easy, the describers started with original photographs that are not available publicly.

The process of image reconstruction, which involved image editing on the part of the reconstructor and text-based commands and links from the describer, proceeded until the describer deemed the reconstruction satisfactory. In many cases, this took an hour or less; in some, depending on the availability of similar images on the Internet and the reconstructor’s familiarity with Photoshop, it took all day.

We then processed the text transcript and compressed it using a standard text compressor. Because that transcript contains all the information that the reconstructor needed to satisfactorily recreate the image for the describer, we could consider it to be the compressed representation of the original image.
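The measurement step can be sketched in a few lines. The article does not say which text compressor was used, so this example assumes Python’s built-in `zlib` as a stand-in, and the transcript text is made up for illustration.

```python
import zlib


def compressed_size_bytes(transcript: str) -> int:
    """Size in bytes of the transcript after general-purpose text compression."""
    raw = transcript.encode("utf-8")
    return len(zlib.compress(raw, 9))  # 9 = strongest zlib compression level


# Hypothetical describer messages, not taken from the experiments.
transcript = (
    "Start from the linked photo of two giraffes on a plain.\n"
    "Move the left giraffe closer to the trees.\n"
    "Make the grass drier and more yellow.\n"
    "Add a row of low shrubs along the horizon.\n"
)

raw_size = len(transcript.encode("utf-8"))
packed_size = compressed_size_bytes(transcript)
print(f"raw transcript: {raw_size} bytes, compressed: {packed_size} bytes")
```

The compressed byte count is the number that stands in for the “file size” of the image under this scheme.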

Our next step involved determining how much other people agreed that the image reconstructions based on these compressed text transcripts were accurate representations of the original images. To do this, we crowdsourced via Amazon’s Mechanical Turk (MTurk) platform. We uploaded 13 human-reconstructed images side-by-side with the original images and asked Turk workers (Turkers) to rate the reconstructions on a scale of 1 (completely unsatisfied) to 10 (completely satisfied).

Such a scale is admittedly vague, but we left it vague by design. Our goal was to measure how much people liked the images produced by our reconstruction scheme, without constraining “likeability” by definitions.

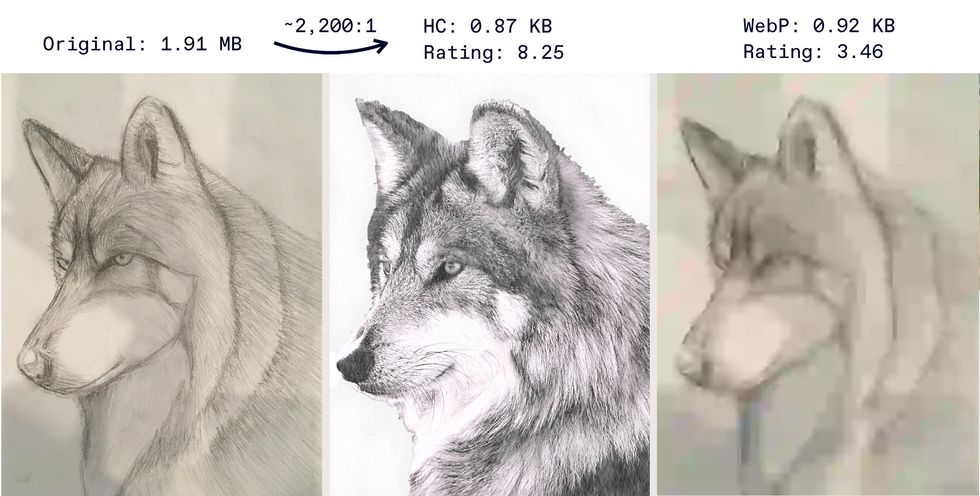

In this reconstruction of the compressed images of a sketch (left), the human compression system (center) did much better than the WebP algorithm (right), in terms of both compression ratio and score, as determined by MTurk worker ratings.

Ashutosh Bhown, Irena Hwang, Soham Mukherjee, and Sean Yang

Given our unorthodox setup for performing image reconstruction (the use of humans, video chat software, enormous image databases, and Internet search engines to search said databases), it’s nearly impossible to directly compare the reconstructions from our scheme to any existing image compression software. Instead, we decided to compare how well a machine can do with an amount of information comparable to that generated by our describers. We used one of the best available lossy image compressors, WebP, to compress the describer’s original images down to file sizes equal to the describer’s compressed text transcripts. Because even the lowest quality level allowed by WebP created compressed image files larger than our humans did, we had to reduce the image resolution and then compress it using WebP’s minimum quality level.
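One way to picture that size-matching step is a loop that keeps shrinking the image until the minimum-quality encoding fits the byte budget set by the transcript. The sketch below is an assumption about how such a search could be organized, not a description of the procedure actually used: `fit_to_budget`, the 10 percent shrink step, and `toy_encoder` are all hypothetical, and the toy encoder merely imitates an encoder whose output grows with pixel count. It is not WebP.

```python
from typing import Callable, Optional, Tuple


def fit_to_budget(
    width: int,
    height: int,
    budget_bytes: int,
    encode_min_quality: Callable[[int, int], bytes],
    shrink: float = 0.9,
) -> Optional[Tuple[int, int, bytes]]:
    """Shrink the resolution until the minimum-quality encoding fits the budget.

    encode_min_quality(w, h) must return the encoded bytes of the image
    resized to w x h at the encoder's lowest quality setting.
    Returns (w, h, data), or None if even a 1x1 image is too large.
    """
    scale = 1.0
    while True:
        w = max(1, round(width * scale))
        h = max(1, round(height * scale))
        data = encode_min_quality(w, h)
        if len(data) <= budget_bytes:
            return w, h, data
        if w == 1 and h == 1:
            return None
        scale *= shrink


def toy_encoder(w: int, h: int) -> bytes:
    """Stand-in encoder: a 20-byte header plus one byte per 100 pixels."""
    return bytes(20 + (w * h) // 100)


# Hypothetical 12-megapixel photo squeezed into a 4,000-byte budget.
result = fit_to_budget(4000, 3000, 4000, toy_encoder)
if result is not None:
    w, h, data = result
    print(f"fits at {w}x{h}: {len(data)} bytes")
```

With a real encoder, the callback would resize the photo and encode it at the lowest quality setting; everything else in the loop stays the same.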

We then uploaded the same set of original and WebP-compressed images to MTurk.

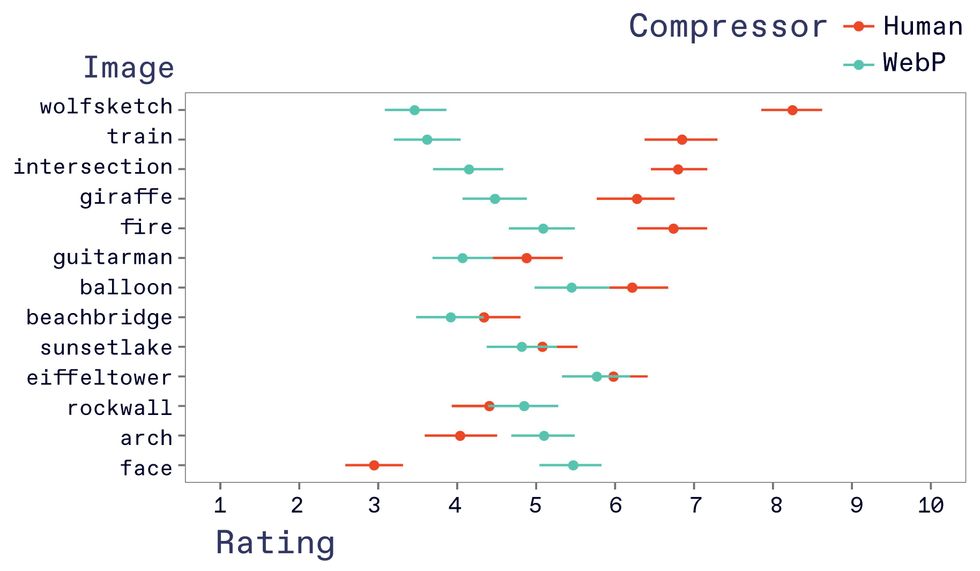

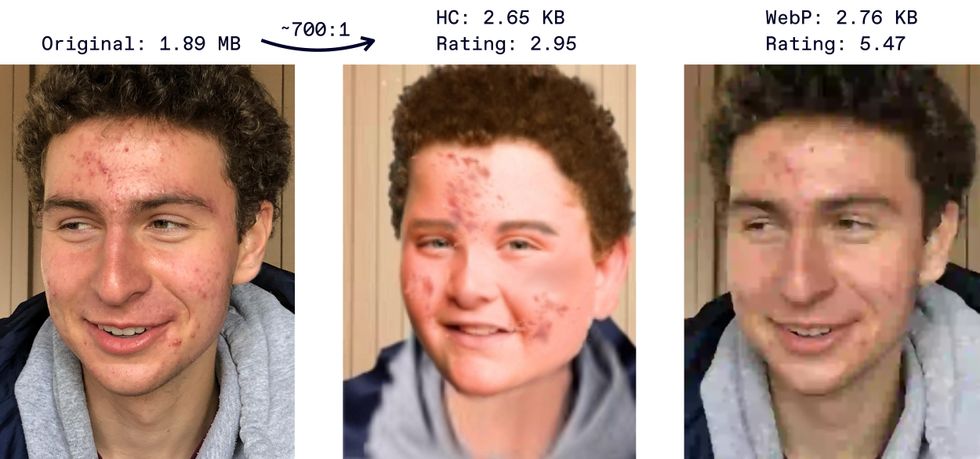

The verdict? The Turkers generally preferred the images produced using our human compression scheme. In most cases, the humans beat the WebP compressor, and for some images they won by a lot. For a reconstruction of a sketch of a wolf, the Turkers gave the humans a mean rating of more than eight, compared with one of less than four for WebP. When it came to reconstructing the human face, WebP had a significant edge, with a mean rating of 5.47 to 2.95, and it slightly beat the human reconstructions in two other cases.

In tests of human compression vs. the WebP compression algorithm at equivalent file sizes, the human reconstruction was generally rated higher by a panel of MTurk workers, with some notable exceptions.

Judith Fan

That’s good news, because our scheme resulted in extraordinarily large compression ratios. Our human compressors condensed the original images, which all clocked in at around a few megabytes, down to only a few thousand bytes each, a compression ratio of some 1,000-fold. This file size turned out to be surprisingly close, within the same order of magnitude, to the proverbial thousand words that pictures supposedly contain.
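The arithmetic behind that comparison is easy to check. The figures below are round numbers chosen only to match the orders of magnitude quoted above, and the six bytes per word (five letters plus a space) is an assumed rule of thumb for uncompressed English text, not a measured value.

```python
from math import floor, log10

# Assumed round figures, for illustration only.
original_bytes = 3_000_000      # "a few megabytes"
transcript_bytes = 3_000        # "a few thousand bytes"
bytes_per_word = 6              # assumption: ~5 letters plus a space

compression_ratio = original_bytes / transcript_bytes
thousand_words_bytes = 1_000 * bytes_per_word

# Two sizes share an order of magnitude if they have the same power of ten.
same_order = floor(log10(transcript_bytes)) == floor(log10(thousand_words_bytes))

print(compression_ratio)     # 1000.0
print(thousand_words_bytes)  # 6000
print(same_order)            # True
```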

The reconstructions also provided valuable insight into the visual priorities of humans. Consider one of our sample images, a safari scene featuring two majestic giraffes. The human reconstruction retained almost all discernible details (albeit somewhat lacking in botanical accuracy): individual trees just behind the giraffes, a row of low-lying shrubbery in the distance, individual blades of parched grass. This scored very highly among the Turkers compared with WebP compression. The latter resulted in a blurred scene in which it was hard to tell where the trees ended and the animals began. This example demonstrates that when it comes to complex images with numerous elements, what matters to humans is that all of the semantic details of an image are still present after compression, never mind their precise positioning or color shade.

The human reconstructors did best on images involving elements for which similar images were widely available, including landmarks and monuments as well as more mundane scenes, like traffic intersections. The success of these reconstructions emphasizes the power of using a comprehensive public image database during compression. Given the existing body of public images, plus user-provided images via social networking services, it is conceivable that a compression scheme that taps into public image databases could outperform today’s pixel-centric compressors.

Our human compression system did worst on an up-close portrait photograph of the describer’s close friend. The describer tried to communicate details like clothing type (hoodie sweatshirt), hair (curly and brown), and other notable facial features (a typical case of adolescent acne). Despite these details, the Turkers judged the reconstruction to be severely lacking, for the very simple reason that the person in the reconstruction was undeniably not the person in the original photo.

Human image compressors fell short when working with human faces. Here, the WebP algorithm’s reconstruction (right) is clearly more successful than the human attempt (center).

Ashutosh Bhown, Irena Hwang, Soham Mukherjee, and Sean Yang

What was easy for a human to perceive in this case was hard to break into discrete, describable parts. Was it not the same person because the friend’s jaw was more angular? Because his mouth curved up more at the edges? The answer is some combination of all of these reasons and more, some ineffable quality that humans struggle to verbalize.

It’s worth pointing out that, for our tests, we used high schoolers for the tasks of description and reconstruction, not trained experts. If these experiments were performed, for example, with experts at image description who work in cultural accessibility for people with low or no vision, paired with expert artists, they would likely have much better results. That is, this approach has far more potential than we were able to demonstrate.

Of course, our human-to-human compression setup isn’t anything like a computer algorithm. The key feature of modern compression algorithms, which our scheme sorely lacks, is reproducibility: every time you shove the same image into the type of compressor that can be found on most computers, you can be absolutely sure that you’ll get the exact same compressed result.

We are not envisioning a commercial compressor that involves sets of humans around the world discussing images. Rather, a practical implementation of our compression scheme would likely be made up of various artificial intelligence techniques.

One potential replacement for the human describer and reconstructor pair is something called a generative adversarial network (GAN). A GAN is a fascinating blend of two neural networks: one that attempts to generate a realistic image (the “generator”) and another that attempts to distinguish between real and fake images (the “discriminator”). GANs have been used in recent years to accomplish a variety of tasks: transmuting zebras into horses, re-rendering photographs à la the most popular Impressionist styles, and even generating phony celebrities.

A GAN similarly designed to create images using a stunningly low number of bits could easily automate the task of breaking down an input image into different features and objects, then compress them according to their relative importance, possibly using similar images. And a GAN-based algorithm would be perfectly reproducible, fulfilling the basic requirement of compression algorithms.

Another key component of our human-centric scheme that would need to be automated is, ironically, human judgment. Although the MTurk platform can be useful for small experiments, engineering a robust compression algorithm that includes an appropriate loss function would require not only a vast number of responses, but also consistent ones that agree on the same definition of image quality. As paradoxical as it seems, AI in the form of neural networks able to predict human scores could provide a far more efficient and reliable representation of human judgment here, compared with the opinions of a horde of Turkers.

We believe that the future of image compression lies in the hybridization of human and machine. Such mosaic algorithms, with human-inspired priorities and robotic efficiency, are already being seen in a wide array of other fields. For decades, learning from nature has pushed forward the entire field of biomimetics, resulting in robots that locomote as animals do and uncanny military or emergency rescue robots that almost, but not quite, look like man’s best friend. Human-computer interface research, in particular, has long taken cues from humans, leveraging crowdsourcing to create more conversational AI.

It’s time that similar partnerships between man and machine worked to improve image compression. We think that, with our experiments, we moved the goalposts for image compression beyond what was assumed to be possible, giving a glimpse of the astronomical performance that image compressors might attain if we rethink the pixel-centric approach of the compressors we have today. And then we truly might be able to say that a picture is worth a thousand words.

The authors would like to acknowledge Ashutosh Bhown, Soham Mukherjee, Sean Yang, and Judith Fan, who also contributed to this research.
